In Part 1, Andy explained why we needed a better prerenderer. Now, he explains how he built our own.

How hard could it be?

Though I generally feel daunted when I look at other people’s code, after looking through the prerenderers I found on GitHub, the basic premise seemed fairly simple. Essentially, a local server instance would be started, with the site’s docroot set as its document root. Then a browser client or DOM library would be loaded, navigate to some provided routes and request them from the server instance. Finally, the markup from the responses to those requests would be saved to a file.

As the build process for Vue is a Node one, remaining within the Node environment seemed sensible. Node already has well-established server capabilities, further extended by Express. So that’s our server. Many of the existing prerender libraries used Puppeteer, an API library that allows communication with an (optionally) headless Chromium browser instance. Puppeteer has methods that allow you to navigate to a page, wait for specific elements to load before continuing execution, and save the generated HTML. So that was the client sorted.

And writing the generated HTML back to the file system could be done using Node’s existing fs library, which is file creation taken care of.

Basically, the prerenderer needed to pull together the functionality of three different sets of libraries – a server, a client and a filesystem lib. The rest would just be configuration.

Our implementation: A tale of three classes

For those of you familiar with the British indie music scene in 2006, let’s Skip to the End. For everyone else, let’s preview the result and then look through how we got there.

What was a hypothetical project became an actual thing that Eco Web Hosting now uses. Here are the lines that call it in our build script:

```javascript
const SpaPrerenderer = require('spa-prerenderer');
const prerenderer = new SpaPrerenderer({
  staticDir: __dirname + '/../ewh-main-site/public_html/vue/dist',
  routes: [
    '/',
    '/vps',
    '/contact',
    '/web-hosting',
    '/status',
    '/domain-names',
    '/agreements',
    '/agreements/cookie-policy',
    '/agreements/fair-usage-policy',
    '/agreements/privacy-policy',
    '/agreements/security-and-data-processing',
    '/agreements/terms-and-conditions',
    '/agreements/third-party-processors',
    '/reseller-hosting',
    '/about',
    '/managed-wordpress'
  ],
  outputDir: __dirname + '/../ewh-main-site/public_html/vue/dist/',
  waitForElement: '#app',
  useHttps: true,
  supressOutput: false,
  reportPageErrors: true,
});
prerenderer.init();
```

There are three statements over 28 lines. Statement #1 includes the prerenderer for use.

Statement #2 instantiates it with a configuration that provides: an input directory; the routes to prerender; an output directory; a selector for the element the prerenderer should wait for before saving markup; whether to use HTTPS on the local server instance that serves the site to the renderer; whether output should be suppressed; and whether errors from the input pages are reported.

Statement #3 triggers the prerendering.

What each class does

Since the prerenderer was initially planned for use only by ourselves, we knew that it would be used in an up-to-date Node.js environment, meaning I could use nice, clean post-ES6 syntax. Of course, I could have used Babel to transpile down for compatibility with older versions, but it seemed like needless bulk when there are few circumstances in which this would be run in a pre-ES6 environment.

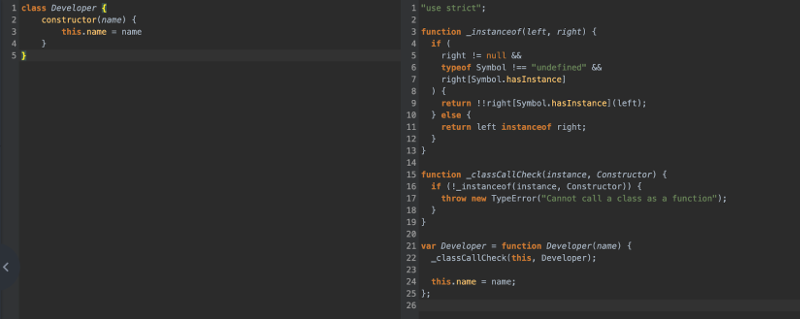

One post-ES6 syntax feature I used was the JavaScript class syntax, which provides a cleaner way of writing JavaScript’s prototype-based inheritance.

Here’s the difference that little bit of syntactic sugar can make to legibility:
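As a minimal illustration, assuming a hypothetical `Route` type that is not part of the prerenderer, the same object might be written in both styles like this:

```javascript
// Pre-ES6 style: a constructor function with a method attached to its prototype.
function LegacyRoute(path) {
  this.path = path;
}

LegacyRoute.prototype.target = function() {
  return this.path + '/index.html';
};

// Class syntax: the same prototype-based object, declared in one place.
class Route {
  constructor(path) {
    this.path = path;
  }

  target() {
    return `${this.path}/index.html`;
  }
}

// Both versions produce objects that behave the same way.
console.log(new LegacyRoute('/vps').target()); // /vps/index.html
console.log(new Route('/vps').target()); // /vps/index.html
```

Under the hood, `Route` is still a function with a prototype; the class keyword only changes how it is written.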

I divided the project into three classes: Options (for validating prerenderer options), Server (the server) and Prerenderer (the client, containing the calls to Puppeteer).

Prerenderer would be the only class instantiated externally. Options and Server exist for Prerenderer to work with. The instance of Prerenderer would be constructed with the desired options, those options validated by a method of Options and an instance of Server started and stored as an instance variable.

Options just contains a static method to validate the object passed to it and throws an Error or TypeError in the event of an invalid value:

```javascript
const path = require('path');

class Options {
  static validate({
    staticDir,
    routes,
    outputDir,
    waitForElement,
    useHttps,
    supressOutput,
    reportPageErrors,
  }) {
    if (staticDir === null) {
      throw new Error('staticDir must be explicitly set.');
    }

    if (!path.isAbsolute(staticDir)) {
      throw new Error('staticDir must be an absolute path.');
    }

    if (!Array.isArray(routes)) {
      throw new TypeError('routes must be an array.');
    }

    if (typeof useHttps !== 'boolean') {
      throw new TypeError('useHttps must be a boolean value.');
    }

    if (typeof supressOutput !== 'boolean') {
      throw new TypeError('supressOutput must be a boolean value.');
    }

    if (typeof reportPageErrors !== 'boolean') {
      throw new TypeError('reportPageErrors must be a boolean value.');
    }

    return true;
  }
}
```

Server would be constructed with two of the options passed in from Prerenderer: staticDir, which is essentially the document root for the site, and useHttps, a boolean flag that dictates whether an http or https server is started. Both are passed in the server options object:

```javascript
constructor(options) {
  this._options = options;
  this._expressServer = express();
  this._nativeServer = null;
}
```

Server essentially wraps an Express instance and augments it with a method to dynamically create a self-signed SSL certificate for it if an https server is required. The server is then started using the init() method:

```javascript
init() {
  const server = this._expressServer;

  // Serve static assets, including dotfiles, from the document root.
  server.use(express.static(this._options.staticDir, {
    dotfiles: 'allow',
  }));

  // Any other path falls back to index.html so the SPA's router can handle it.
  server.get('*', (req, res) => res.sendFile(
    path.join(this._options.staticDir, 'index.html'),
  ));

  return new Promise((resolve) => {
    const tlsParts = this.generateSelfSignedCert();

    // Start either an https or a plain http server; resolve once listening.
    this._nativeServer = this._options.useHttps === true ?
      https.createServer(
        {
          key: tlsParts.key,
          cert: tlsParts.cert,
        },
        this._expressServer,
      ).listen(
        this._options.server.port,
        () => {
          resolve();
        },
      ) :
      server.listen(
        this._options.server.port,
        () => {
          resolve();
        },
      );
  });
}
```

This is how the server is then instantiated and initialised by the prerenderer constructor:

```javascript
constructor({
  staticDir = null,
  routes = ['/'],
  outputDir = '.',
  waitForElement = null,
  useHttps = true,
  supressOutput = false,
  reportPageErrors = false,
} = {}) {
  Options.validate({
    staticDir,
    routes,
    outputDir,
    waitForElement,
    useHttps,
    supressOutput,
    reportPageErrors,
  });

  this.routes = routes;
  this.staticDir = staticDir;
  this.outputDir = outputDir;
  this.waitForElement = waitForElement;
  this.useHttps = useHttps;
  this.supressOutput = supressOutput;
  this.reportPageErrors = reportPageErrors;
  this.port = portfinder.getPort(3000);

  const serverOptions = {
    server: {port: this.port},
    useHttps: useHttps,
    staticDir: staticDir,
  };

  this.server = new Server(serverOptions);
  this.server.init();
}
```

Once a Prerenderer object has been successfully constructed, it should have a running server as a Server object instance variable. Prerendering can then begin.
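The constructor's parameter list leans on destructuring defaults, and one detail of how they behave explains why Options still checks types afterwards. The sketch below uses a hypothetical `resolveOptions()` helper (not part of the package) with a cut-down set of the same options. It shows that a default is applied only when a value is missing or `undefined`, never when it is `null`:

```javascript
// Hypothetical helper for illustration only: it resolves options using the
// same destructuring-with-defaults pattern as the constructor's signature.
function resolveOptions({
  staticDir = null,
  routes = ['/'],
  useHttps = true,
} = {}) {
  return {staticDir, routes, useHttps};
}

// Nothing passed at all: the `= {}` fallback applies, then every default.
console.log(resolveOptions().staticDir); // null
console.log(resolveOptions().useHttps); // true

// An explicit undefined is treated like a missing value.
console.log(resolveOptions({useHttps: undefined}).useHttps); // true

// An explicit null is kept as-is, so a later type check has to catch it.
console.log(resolveOptions({useHttps: null}).useHttps); // null
```

This is why an omitted staticDir arrives at the validator as `null`, and why a `null` passed for a boolean option would reach the `typeof` checks rather than being silently replaced.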

Once the prerenderer is constructed

I divided the prerenderer’s functionality into three further instance methods. These are all called from the asynchronous init() method:

```javascript
async init() {
  await this.startBrowser();

  await Promise.all(this.routes.map(async (route) => {
    route = route.startsWith('/') ?
      route :
      `/${route}`;

    const targetBase = this.outputDir.endsWith('/') ?
      this.outputDir.slice(0, -1) :
      this.outputDir;

    const target = `${targetBase}${route}/index.html`;

    if (this.supressOutput === false) {
      console.info(chalk`{blue {bold Prerendering }${route}}`);
    }

    const content = await this.getMarkup(route);

    if (this.supressOutput === false) {
      console.info(
        chalk`{green {bold Prerendered} ${route}} {bold.blue Saving…}`,
      );
    }

    this.saveFile(content, target);

    if (this.supressOutput === false) {
      console.info(chalk`{green.inverse {bold Saved} to ${target}}`);
    }
  }));

  await this.browser.close();
  await this.server.destroy();

  return true;
}
```

The method first calls startBrowser(), which starts a Puppeteer instance and assigns it to the instance variable browser.

Next, await Promise.all() runs an instance of getMarkup() for each route in parallel, calling saveFile() once the markup for each respective route has been retrieved. Only when every one of those asynchronous fetch/save routines has succeeded (resolved) will init() move on to the next statements, which close the Puppeteer instance followed by the server. If any routine fails (is rejected), Promise.all() rejects straight away and init() throws before reaching those clean-up statements.
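Promise.all() rejects as soon as any one of its promises rejects, which matters for what happens after it. The sketch below uses a hypothetical `renderRoute()` stand-in (no browser involved) to contrast it with Promise.allSettled(), which waits for every promise regardless of outcome. It is an illustration of the language behaviour, not a change the prerenderer makes:

```javascript
// Hypothetical stand-in for the per-route fetch/save routine.
const renderRoute = (route) =>
  route === '/broken' ?
    Promise.reject(new Error(`Could not render ${route}`)) :
    Promise.resolve(`${route} done`);

const routes = ['/', '/broken', '/about'];

// Promise.all rejects with the first rejection it sees.
const withAll = Promise.all(routes.map(renderRoute))
    .then(() => 'all resolved')
    .catch((err) => `rejected: ${err.message}`);

// Promise.allSettled waits for every routine and reports each outcome.
const withAllSettled = Promise.allSettled(routes.map(renderRoute))
    .then((results) => results.map((result) => result.status));

withAll.then((summary) => console.log(summary));
// rejected: Could not render /broken

withAllSettled.then((statuses) => console.log(statuses));
// [ 'fulfilled', 'rejected', 'fulfilled' ]
```

If clean-up must always run, wrapping the awaited call in try/finally is another option.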

Here’s how the three methods that init() calls work in a little more detail.

Starting Puppeteer with startBrowser()

startBrowser() fires up an instance of Puppeteer. Since Puppeteer will be accessing only our local Express server (which may have a self-signed certificate), the --ignore-certificate-errors flag is included to prevent Puppeteer complaining that the certificate isn’t signed by a recognised CA:

```javascript
async startBrowser() {
  try {
    this.browser = await puppeteer.launch({
      headless: true,
      ignoreHTTPSErrors: true,
      defaultViewport: null,
      args: [
        '--ignore-certificate-errors',
      ],
    });
  } catch (e) {
    throw e;
  }
}
```

Getting the routes’ markup with getMarkup()

getMarkup() opens a new tab in the Puppeteer browser instance startBrowser() created for the route it is getting the markup for and then navigates to it.

If the reportPageErrors option has been set to true, it outputs any errors thrown by the page’s own scripts. If the waitForElement option has been set, it waits up to 60 seconds for that HTML element to be generated.

Assuming no errors have been thrown, it then returns the markup:

```javascript
async getMarkup(route) {
  const s = this.useHttps === true ? 's' : '';
  const url = `http${s}://localhost:${this.port}${route}`;
  const page = await this.browser.newPage();

  if (this.reportPageErrors === true) {
    page.on('pageerror', (err) => {
      const errorHint = this.waitForElement !== null ?
        chalk`This may prevent the element {bold ${this.waitForElement}}` +
        ` from rendering, causing a timeout.` :
        '';

      console.error(
        chalk`{bgRed.white {bold Error from the page being rendered:}}`,
      );
      console.error(chalk`{red ${err}}`);
      console.error(chalk`{red ${errorHint}}`);
    });
  }

  await page.goto(url, {timeout: 60000});

  if (this.waitForElement !== null) {
    await page.waitForSelector(this.waitForElement, {timeout: 60000});
  }

  return await page.content();
}
```

Saving the routes’ markup using saveFile()

saveFile() takes content and saves it to a file path using Node’s fs library:

```javascript
saveFile(fileContent, filePath) {
  // Everything before the final path segment is the directory to create.
  const pathComponents = filePath.split('/');
  pathComponents.pop();
  const dirPath = pathComponents.join('/');

  if (!fs.existsSync(dirPath)) {
    fs.mkdirSync(dirPath, {recursive: true});
  }

  // Remove anything at the target path that is not a regular file.
  if (fs.existsSync(filePath) && !fs.lstatSync(filePath).isFile()) {
    fs.unlinkSync(filePath);
  }

  fs.writeFileSync(filePath, fileContent);
}
```

Wonderful. In the context of the init() method that calls it, the file path will be the outputDir value, followed by the route and then ‘/index.html’.
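The target path is assembled with string concatenation in init() and taken apart again by splitting on ‘/’ in saveFile(). As a possible alternative, sketched here with a hypothetical `targetFor()` helper that is not part of the package, Node’s path module can do both jobs. The example uses `path.posix` so that the results are the same on every platform:

```javascript
const path = require('path');

// Hypothetical helper for illustration only: builds the output file path for
// a route. path.posix.join collapses duplicate slashes, so it does not matter
// whether outputDir ends with '/' or whether the route starts with one.
function targetFor(outputDir, route) {
  return path.posix.join(outputDir, route, 'index.html');
}

console.log(targetFor('/site/dist/', '/'));
// /site/dist/index.html

console.log(targetFor('/site/dist', '/vps'));
// /site/dist/vps/index.html

// path.posix.dirname gives the directory that needs to exist before writing.
console.log(path.posix.dirname(targetFor('/site/dist', 'agreements/cookie-policy')));
// /site/dist/agreements/cookie-policy
```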

But will it blend?

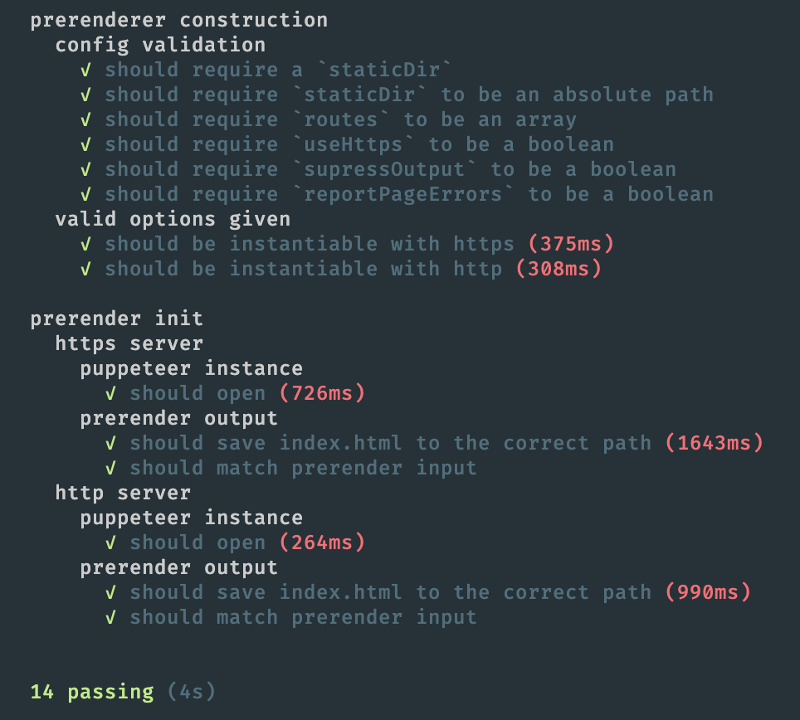

To make sure my initial enthusiasm hadn’t been pure folly, I thought perhaps it might be wise to write some tests.

I’m a great fan of code that reads like human being language, and for this reason, I used the Mocha test suite with the Chai assertion library, which allowed me to write tests like this:

```javascript
describe('prerender output', () => {
  it('should save index.html to the correct path', async () => {
    await httpPrerenderer.init();

    fs.existsSync(`${outputDir}/index.html`)
        .should.be.true;
  });

  it('should match prerender input', () => {
    const output = fs.readFileSync(`${outputDir}/index.html`, 'utf8');

    output.should.contain(
        '<section id="dynamic"><h2>Dynamic bit</h2>',
        'Actual output does not match expected output',
    );
  });
});
```

The results were promising (though the tests were a bit slow due to the inherent overhead of DOM rendering in a headless browser via Puppeteer):

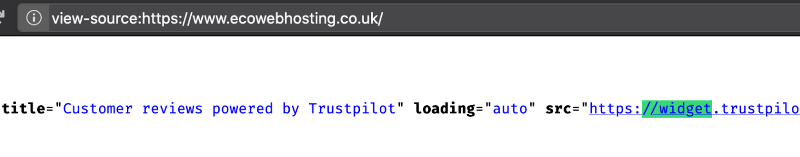

Hot diggity dog. And the http:// URL in the prerendered source?

Gone. Success!

Open sourcing the results

Writing a new prerenderer for the purpose of banishing a mixed content error on our own site is all well and good, but it felt a little selfish to keep it to ourselves. For this reason, I’ve published it on Eco Web Hosting’s brand new GitHub profile and as an npm package for the world to use (and scrutinise).

This is my (and Eco Web Hosting’s) first foray into publishing open source packages so please, install, test, fork, adapt, report upon! Contributions and feedback are more than welcome.

Note:

Looking back, I could have submitted a pull request to the original prerenderer plugin to add the missing https toggle feature, though before embarking on this project, the source code made little sense to me. Ironically, it’s only having completed my own version that I now see my efforts might have been better directed at contributing to the original. Hindsight is, as they say, 20/20.